The Token Economy: Cost Analysis for LLM APIs

As AI adoption accelerates, understanding token economics becomes critical for business sustainability. Whether you're building a chatbot, content generation platform, or AI-powered application, token costs can quickly spiral out of control. This guide will help you navigate the token economy and optimize your spending.

Understanding Token Pricing

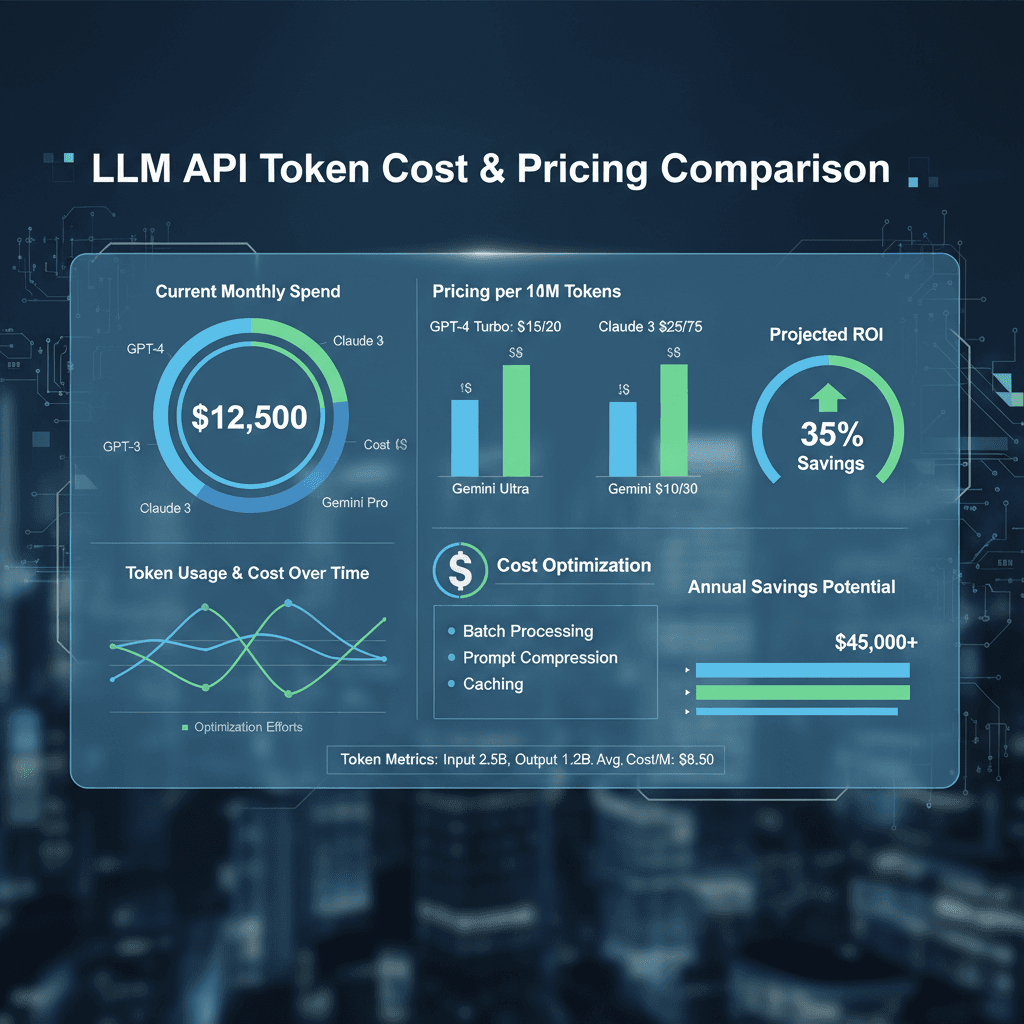

Most LLM providers use a token-based pricing model with different rates for input and output tokens. Here's how the major players price their services as of November 2024:

OpenAI Pricing

- GPT-4 Turbo: $10/1M input tokens, $30/1M output tokens

- GPT-4: $30/1M input, $60/1M output (more expensive; a legacy model largely superseded by GPT-4 Turbo and GPT-4o)

- GPT-3.5 Turbo: $0.50/1M input, $1.50/1M output

- GPT-4o: $5/1M input, $15/1M output (half the price of GPT-4 Turbo)

Anthropic Claude Pricing

- Claude 3 Opus: $15/1M input, $75/1M output

- Claude 3 Sonnet: $3/1M input, $15/1M output

- Claude 3 Haiku: $0.25/1M input, $1.25/1M output

Google Gemini Pricing

- Gemini 1.5 Pro: $7/1M input (first 128K tokens), $21/1M output

- Gemini 1.5 Flash: $0.075/1M input, $0.3/1M output (highly competitive)

The Hidden Costs Beyond Token Prices

Token pricing alone doesn't tell the complete story. Consider these additional factors:

1. System Prompts and Context

Every API call includes your system prompt, which adds to token consumption. A 200-token system prompt repeated across 10,000 daily queries adds up to 2 million input tokens per day, or about $10/day at GPT-4o's $5/1M input rate ($20/day on GPT-4 Turbo).

Optimization tip: Use prompt compression techniques. Cutting your system prompt from 300 tokens to 150 saves 1.5 million input tokens per day at 10K requests/day: about $225/month on GPT-4o, or $450/month on GPT-4 Turbo.
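The overhead arithmetic can be sketched in a few lines of Python. The helper name `system_prompt_cost` and the 30-day month are assumptions made for illustration; the $5/1M rate is the GPT-4o input price from the list above.

```python
def system_prompt_cost(prompt_tokens: int, requests_per_day: int,
                       input_price_per_million: float, days: int = 1) -> float:
    """Dollar cost of resending a system prompt on every request."""
    total_tokens = prompt_tokens * requests_per_day * days
    return total_tokens * input_price_per_million / 1_000_000


GPT_4O_INPUT = 5.00  # $/1M input tokens, taken from the pricing list above

# Daily cost of a 200-token system prompt at 10,000 requests/day.
daily = system_prompt_cost(200, 10_000, GPT_4O_INPUT)

# Monthly cost before and after compressing the prompt (30-day month assumed).
before = system_prompt_cost(300, 10_000, GPT_4O_INPUT, days=30)
after = system_prompt_cost(150, 10_000, GPT_4O_INPUT, days=30)

print(f"200-token prompt: ${daily:.2f}/day")
print(f"Monthly saving from 300 -> 150 tokens: ${before - after:.2f}")
```

Swapping in another model's input rate shows how quickly the same prompt becomes more or less expensive.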

2. Output Generation Costs

Output tokens are typically more expensive: 2-5x the input rate in the price lists above (3x for most OpenAI models, 5x for Claude 3). A model generating 500 tokens per request costs significantly more than one generating 200 tokens, even if input is identical.

3. Error Handling and Retries

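Retries can quietly inflate your costs: every retry re-sends the full prompt, and a request that times out on the client side or returns unusable output may still be billed. Implement proper error handling, exponential backoff with a retry cap, and caching to minimize wasted tokens.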
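A minimal sketch of exponential backoff with jitter follows. `TransientAPIError` is a hypothetical stand-in for whatever rate-limit or timeout exception your provider's SDK raises, and the default delays are illustrative, not recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientAPIError(Exception):
    """Hypothetical stand-in for a provider's rate-limit or timeout error."""


def call_with_backoff(request: Callable[[], T], max_attempts: int = 4,
                      base_delay: float = 1.0, max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `request` on transient errors, doubling the wait ceiling each time."""
    for attempt in range(max_attempts):
        try:
            return request()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # give up: a hard cap keeps retry spend bounded
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter spreads retries out so clients don't retry in lockstep.
            sleep(random.uniform(0, ceiling))
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter exists so the function can be tested without waiting; in production you would leave it at the default. Capping `max_attempts` matters for cost as much as for latency, since each attempt can consume tokens.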

4. Model-Specific Token Counts

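The same text tokenizes differently across models. A passage that GPT-3.5 splits into 100 tokens might come out as 110 tokens under Claude's tokenizer. That 10% difference compounds across millions of requests, so compare models on tokens actually billed, not just on list price.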

Real-World Cost Scenarios

Scenario 1: Customer Support Chatbot

Setup:

- System prompt: 250 tokens

- Average customer query: 100 tokens

- Average response: 300 tokens

- Daily volume: 5,000 conversations

Daily token usage: 5,000 × (250 + 100 + 300) = 3.25M tokens

Using GPT-4o: (250 + 100) × 5,000 × $5/1M + 300 × 5,000 × $15/1M = $8.75 + $22.50 = $31.25/day

Monthly cost: ~$938/month
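The scenario arithmetic can be reproduced with a small calculator, which makes it easy to re-run with your own numbers. The `Scenario` class and `daily_cost` function are illustrative names, and the 30-day month is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    system_prompt_tokens: int
    query_tokens: int
    response_tokens: int
    requests_per_day: int


def daily_cost(s: Scenario, input_price: float, output_price: float) -> float:
    """Daily dollar cost; prices are in dollars per 1M tokens."""
    # The system prompt and the user query are both billed as input.
    input_tokens = (s.system_prompt_tokens + s.query_tokens) * s.requests_per_day
    output_tokens = s.response_tokens * s.requests_per_day
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


support_bot = Scenario(system_prompt_tokens=250, query_tokens=100,
                       response_tokens=300, requests_per_day=5_000)

# GPT-4o rates from the pricing list: $5/1M input, $15/1M output.
per_day = daily_cost(support_bot, input_price=5.00, output_price=15.00)
print(f"${per_day:.2f}/day, ${per_day * 30:.2f} per 30-day month")
```

Keeping input and output as separate terms makes it obvious which side of the request dominates the bill.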

Scenario 2: Content Generation Platform

Setup:

- System prompt: 400 tokens

- User prompt: 150 tokens

- Generated content: 1,500 tokens

- Daily volume: 2,000 requests

Daily token usage: 2,000 × (400 + 150 + 1,500) = 4.1M tokens

Using Claude 3 Haiku: (400 + 150) × 2,000 × $0.25/1M + 1,500 × 2,000 × $1.25/1M = $0.28 + $3.75 = $4.03/day

Monthly cost: ~$121/month

Cost Optimization Strategies

1. Model Selection Strategy

Don't default to the most powerful (and expensive) model. Create a routing strategy:

- Simple queries: Use GPT-3.5 Turbo or Haiku (roughly 10x cheaper than GPT-4o or Sonnet)

- Medium complexity: Use GPT-4o or Sonnet (good balance)

- Complex reasoning: Use GPT-4 Turbo or Opus (only when necessary)
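The routing tiers above could be sketched as follows. The complexity heuristic (word counts and a keyword list) is purely hypothetical and deliberately crude; a production router would more likely use a small classifier or historical quality data.

```python
# Tier -> model, following the three tiers listed above (OpenAI options shown).
MODEL_TIERS = {
    "simple": "gpt-3.5-turbo",
    "medium": "gpt-4o",
    "complex": "gpt-4-turbo",
}

# Hypothetical phrases that hint at multi-step reasoning.
REASONING_HINTS = ("step by step", "analyze", "compare", "debug", "derive")


def classify(query: str) -> str:
    """Crude illustrative heuristic: keywords and length decide the tier."""
    text = query.lower()
    words = len(text.split())
    if any(hint in text for hint in REASONING_HINTS) or words > 150:
        return "complex"
    if words > 30:
        return "medium"
    return "simple"


def route(query: str) -> str:
    """Pick the cheapest model tier the heuristic considers adequate."""
    return MODEL_TIERS[classify(query)]


print(route("What are your opening hours?"))
print(route("Compare these two supplier contracts and analyze the liability clauses."))
```

Whatever the classifier, it helps to log which tier handled each request so you can check that the cheap tier is not degrading answer quality.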

2. Prompt Engineering for Cost

Every token in your system prompt costs money on every request:

- Remove unnecessary examples from few-shot prompts

- Use concise language without sacrificing clarity

- Move static context to retrieval systems (RAG) instead of prompts

- Use placeholders instead of inline data when possible

3. Output Control

Since output tokens are more expensive, control generation:

- Set max_tokens to reasonable limits

- Use JSON mode to ensure structured output (avoid verbose explanations)

- Request summaries instead of full responses

- Implement streaming to stop generation early
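As one illustration of the streaming point, the sketch below stops reading a stream once a stop marker appears or a character budget is reached. `fake_stream` is a hypothetical stand-in for a provider's streaming response; with a real SDK you would also close the connection, and how a cancelled stream is billed is provider-specific, so treat any saving as something to verify.

```python
from typing import Iterable, Iterator


def take_until(chunks: Iterable[str], stop_marker: str, max_chars: int) -> str:
    """Collect streamed text, stopping at `stop_marker` or after `max_chars`."""
    collected = ""
    for chunk in chunks:
        collected += chunk
        # Search the whole buffer so a marker split across chunks is still found.
        cut = collected.find(stop_marker)
        if cut != -1:
            return collected[:cut]  # answer complete; stop reading the stream
        if len(collected) >= max_chars:
            return collected[:max_chars]
    return collected


def fake_stream() -> Iterator[str]:
    """Hypothetical stand-in for a provider's streaming response."""
    yield from ["The answer is 42.", " END", " Here is a long", " explanation..."]


print(take_until(fake_stream(), stop_marker="END", max_chars=500))
```

Setting `max_tokens` on the request remains the more reliable control; early stopping is a complement for cases where you only know mid-stream that you have enough.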

4. Caching and Batch Processing

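Process similar requests together and cache results when possible. As of November 2024, OpenAI's prompt caching discounts cached input tokens by 50%, while Anthropic's prompt caching bills cache reads at roughly 10% of the base input rate (a 90% reduction on repeated context).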
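Separately from provider-side prompt caching, you can cache whole responses in your own application so that identical requests never reach the API. The sketch below is a minimal in-memory version; `ResponseCache` and the `call_model` callback are hypothetical names, and a real deployment would likely add expiry and a shared store.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """In-memory response cache keyed by a hash of (model, prompt)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # The NUL separator prevents ("ab", "c") colliding with ("a", "bc").
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str,
                    call_model: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call_model(model, prompt)  # only cache misses spend tokens
        self._store[key] = result
        return result
```

Exact-match caching only pays off when requests repeat verbatim (FAQ-style queries, for example); tracking `hits` and `misses` tells you whether it is worth keeping.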

5. Use Smaller Open-Source Models

For many tasks, fine-tuned open-source models (Llama, Mistral, Qwen) are more cost-effective when self-hosted. Initial investment pays off at scale.

Token Economics Forecasting

To predict your costs, use this formula:

Daily Cost = (Input Tokens × Input Price) + (Output Tokens × Output Price)

Example: 10,000 requests/day

Average input: 200 tokens × 10,000 = 2M tokens/day

Average output: 300 tokens × 10,000 = 3M tokens/day

Using GPT-4o: (2M × $5/1M) + (3M × $15/1M) = $10 + $45 = $55/day, or about $1,650/month
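The same formula can be applied across several models at once to see how the choice of model changes the forecast. This is a sketch: the prices are copied from the lists earlier in this guide, and the `forecast` function name and the 30-day month are assumptions.

```python
from typing import Tuple

# Prices in $/1M tokens as (input, output), copied from the pricing lists above.
PRICES = {
    "GPT-4o": (5.00, 15.00),
    "GPT-3.5 Turbo": (0.50, 1.50),
    "Claude 3 Sonnet": (3.00, 15.00),
    "Claude 3 Haiku": (0.25, 1.25),
}


def forecast(requests_per_day: int, input_tokens: int, output_tokens: int,
             model: str, days: int = 30) -> Tuple[float, float]:
    """Return (daily_cost, cost_over_days) in dollars for one model."""
    input_price, output_price = PRICES[model]
    per_request = input_tokens * input_price + output_tokens * output_price
    daily = requests_per_day * per_request / 1_000_000
    return daily, daily * days


# The example above: 10,000 requests/day, 200 input and 300 output tokens each.
for model in PRICES:
    daily, monthly = forecast(10_000, 200, 300, model)
    print(f"{model:16s} ${daily:8.2f}/day  ${monthly:10.2f}/month")
```

Dividing by 1,000,000 in exactly one place is a simple guard against the most common forecasting mistake, which is mixing per-token and per-million-token prices.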

Monitoring and Alerts

Set up monitoring to catch cost overruns:

- Track API usage daily via provider dashboards

- Set budget alerts in your cloud provider

- Analyze token usage patterns to detect anomalies

- Review expensive queries and optimize them
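A minimal sketch of the anomaly and budget checks above, assuming you already export one cost figure per day from your provider dashboard. The `check_usage` function, the z-score threshold of 3, and the 30-day projection are illustrative choices, not a standard.

```python
from statistics import mean, stdev
from typing import List, Sequence


def check_usage(daily_costs: Sequence[float], monthly_budget: float,
                z_threshold: float = 3.0) -> List[str]:
    """Return alert messages for the most recent day in `daily_costs`."""
    alerts: List[str] = []
    *history, today = daily_costs  # raises ValueError if the sequence is empty

    # Anomaly check: is today far above the trailing average?
    if len(history) >= 2:
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (today - mu) / sigma > z_threshold:
            alerts.append(f"anomaly: ${today:.2f} today vs ${mu:.2f} typical")

    # Budget check: project the average daily spend over a 30-day month.
    projected = mean(daily_costs) * 30
    if projected > monthly_budget:
        alerts.append(f"budget: projected ${projected:.2f} exceeds ${monthly_budget:.2f}")
    return alerts


print(check_usage([30, 31, 32, 30, 31, 95], monthly_budget=1000))
```

Running a check like this daily catches a runaway retry loop or a prompt regression within a day rather than at the end of the billing cycle.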

Conclusion

Token economics are fundamental to the sustainability of LLM-powered applications. By understanding pricing structures, optimizing prompts, selecting appropriate models, and implementing cost controls, you can build profitable AI products.

Use Tiktokenizer to analyze your prompts and estimate costs before deploying. Monitor usage closely and be prepared to iterate on your architecture as your application scales.